Year recap for Music Demixer: dark theme, MIDI, ONNX

Recapping major updates to freemusicdemixer.com this year!

This year, I put a lot of work into https://freemusicdemixer.com, my SaaS for music stem separation.

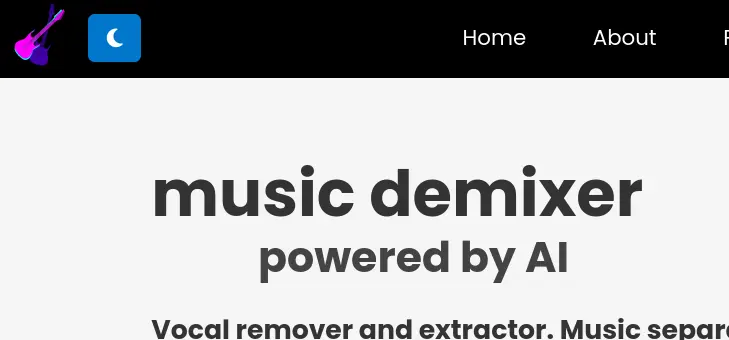

Dark theme

This year I switched my computer to a dark theme, and it annoyed me that my own website only had a light theme and didn't respect that preference. So, for purely selfish reasons, I went ahead and implemented a dark theme.

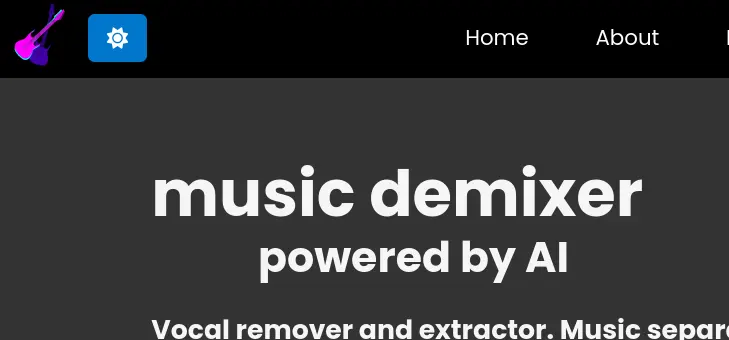

You can toggle it with the moon/sun icon in the top left (next to the logo), and you can reset the stored preference to follow the system theme with a hidden button on the login page.

CSS implementation

In my CSS file, I have blocks for theme-light and theme-dark where I set all of the color-related properties for each class and id:

    .theme-light {
        body a:not(.navbar-links a):not(.footer-container a) {
            color: #0000EE; /* Standard blue */
        }
        ...
    }

    .theme-dark {
        body a:not(.navbar-links a):not(.footer-container a) {
            color: #87CEEB; /* Light blue for contrast */
        }
        ...
    }

I then duplicate these blocks under prefers-color-scheme blocks too:

    @media (prefers-color-scheme: light) {
        /* copy-pasted from theme-light */
    }

    @media (prefers-color-scheme: dark) {
        /* copy-pasted from theme-dark */
    }

JavaScript implementation

In JavaScript, I use the theme key in local storage to store the user's preference. If it's not set, I use the system preference. I also have a reset button in the login settings which removes the stored theme and reverts to the system preference.

    // Reset theme to system preference
    themeResetButton.addEventListener('click', () => {
        localStorage.removeItem('theme');
        loadTheme();
    });

    // Apply theme and update icon and label
    function applyTheme(theme) {
        document.documentElement.classList.remove('theme-dark', 'theme-light');
        if (theme === 'dark') {
            document.documentElement.classList.add('theme-dark');
            themeIcon.className = 'fa fa-sun';
            themeToggle.setAttribute('aria-label', 'Switch to light mode');
        } else {
            document.documentElement.classList.add('theme-light');
            themeIcon.className = 'fa fa-moon';
            themeToggle.setAttribute('aria-label', 'Switch to dark mode');
        }
    }

    // Load theme based on system preference if no user choice is stored
    function loadTheme() {
        const savedTheme = localStorage.getItem('theme');
        if (savedTheme) {
            applyTheme(savedTheme); // Use stored preference
        } else {
            // Use system preference
            applyTheme(window.matchMedia('(prefers-color-scheme: dark)').matches ? 'dark' : 'light');
        }
    }

    // Toggle theme and store preference if explicitly set by user
    themeToggle.addEventListener('click', () => {
        const currentTheme = document.documentElement.classList.contains('theme-dark') ? 'dark' : 'light';
        const newTheme = currentTheme === 'dark' ? 'light' : 'dark';
        localStorage.setItem('theme', newTheme); // Store only if user toggles
        applyTheme(newTheme);
    });

I use Giscus for blog comments (Giscus uses GitHub Discussions as a backend for comments, which is great for static websites). I switch the Giscus theme as well, if its iframe is loaded:

    // in theme switcher code
    const isPostPage = document.getElementById('giscus-script');
    if (isPostPage) {
        changeGiscusTheme(theme);
    }

    // Function to change the Giscus theme if the iframe is loaded
    function changeGiscusTheme(theme) {
        function sendMessage(message) {
            const iframe = document.querySelector('iframe.giscus-frame');
            if (!iframe) return; // Exit if the iframe is not loaded
            iframe.contentWindow.postMessage({ giscus: message }, 'https://giscus.app');
        }

        sendMessage({
            setConfig: {
                theme: theme
            }
        });
    }

For images, I've created light/dark variants, and I swap each image to the appropriate version whenever the theme changes:

    if (theme === 'dark') {
        // only toggle images if they're not null
        if (demixImg && amtImg) {
            demixImg.src = '/assets/images/music-demix-dark.webp';
            amtImg.src = '/assets/images/midi-amt-dark.webp';
        }
    } else {
        // only toggle images if they're not null
        if (demixImg && amtImg) {
            demixImg.src = '/assets/images/music-demix.webp';
            amtImg.src = '/assets/images/midi-amt.webp';
        }
    }

MIDI/AMT support with Basicpitch and ONNX

Automatic music transcription (AMT) and MIDI

AMT (Automatic Music Transcription) is the task of converting musical audio into musical information (notes, tempos, sheet music, etc.). The Spotify basic-pitch model is a lightweight model which performs well on the task of AMT through conversion to MIDI.

The MIDI file format is a standard for representing musical information in a digital format. It's a great format for representing music in a way that can be easily manipulated by software.
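To make "musical information in a digital format" a bit more concrete: MIDI identifies each pitch by an integer note number from 0 to 127, with 69 conventionally mapped to A4 at 440 Hz in equal temperament. The helper names below are my own, and the octave labelling (60 = C4) is one common convention; some software labels the same note C3.

```python
# Minimal sketch: convert MIDI note numbers to note names and frequencies.
# Assumes 12-tone equal temperament and the "middle C = C4" naming convention.

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def note_name(note_number: int) -> str:
    """Return a name such as 'A4' for a MIDI note number in 0..127."""
    if not 0 <= note_number <= 127:
        raise ValueError("MIDI note numbers range from 0 to 127")
    octave = note_number // 12 - 1
    return f"{NOTE_NAMES[note_number % 12]}{octave}"


def note_frequency(note_number: int, a4_hz: float = 440.0) -> float:
    """Return the frequency in Hz; each semitone is a factor of 2**(1/12)."""
    return a4_hz * 2 ** ((note_number - 69) / 12)


for n in (60, 69, 72):
    print(n, note_name(n), round(note_frequency(n), 2))
```

Because notes are plain integers, software can shift, filter, or rewrite them without touching any audio.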

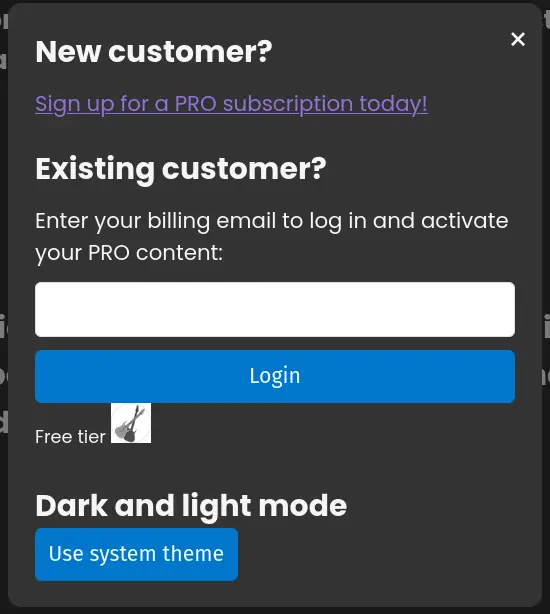

Stem separation and MIDI

Adding a MIDI feature is a great complement to stem separation. With MIDI, you can swap the timbre or instrument of a melodic line, or even change the notes of a melody.
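As a rough illustration of those edits, here is a small sketch that treats a transcribed melody as a list of note events, then transposes it and assigns a different instrument. The `Note` and `Track` types are hypothetical stand-ins, not the data structures used by basic-pitch or my website; the program numbers follow the General MIDI numbering, counted from 0.

```python
# Minimal sketch: editing a melody once it exists as MIDI-style note events.
# Note and Track are hypothetical types made up for this example.
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Note:
    start: float  # seconds
    end: float    # seconds
    pitch: int    # MIDI note number, 0..127


@dataclass(frozen=True)
class Track:
    program: int  # General MIDI program, 0-indexed (0 = acoustic grand piano)
    notes: tuple


def transpose(track: Track, semitones: int) -> Track:
    """Shift every note; notes that would leave the 0..127 range are dropped."""
    shifted = tuple(
        replace(n, pitch=n.pitch + semitones)
        for n in track.notes
        if 0 <= n.pitch + semitones <= 127
    )
    return replace(track, notes=shifted)


def change_instrument(track: Track, program: int) -> Track:
    """Swap the timbre by picking another General MIDI program."""
    if not 0 <= program <= 127:
        raise ValueError("General MIDI programs range from 0 to 127")
    return replace(track, program=program)


melody = Track(program=0, notes=(Note(0.0, 0.5, 60), Note(0.5, 1.0, 64), Note(1.0, 1.5, 67)))
octave_up = transpose(melody, 12)
as_violin = change_instrument(octave_up, 40)  # 40 = violin in General MIDI
print([n.pitch for n in as_violin.notes], as_violin.program)
```

Neither edit is possible on a separated audio stem directly, which is why MIDI output pairs so well with stem separation.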

ONNXRuntime

ONNXRuntime is a high-performance neural inference library. It specializes in inference, which is when an already-trained neural network is run to produce outputs for end-users and clients. ONNXRuntime has support for Android, iOS, WebAssembly, and all sorts of specialized architectures. If you can get your neural network represented in the ONNX format, you can have it run in an optimized manner on almost any device your customers might be using.

Using the ort-builder repository, I converted the basic-pitch model to the ONNX and ORT formats. I then compiled the ONNXRuntime library with a reduced runtime and reduced operator support to create a single static WASM binary that was super easy to integrate into my website.

Results

Either after stem separation or just as a standalone process, you can generate MIDI files for harmonic instruments (piano, guitar, etc.) from your uploaded audio files:

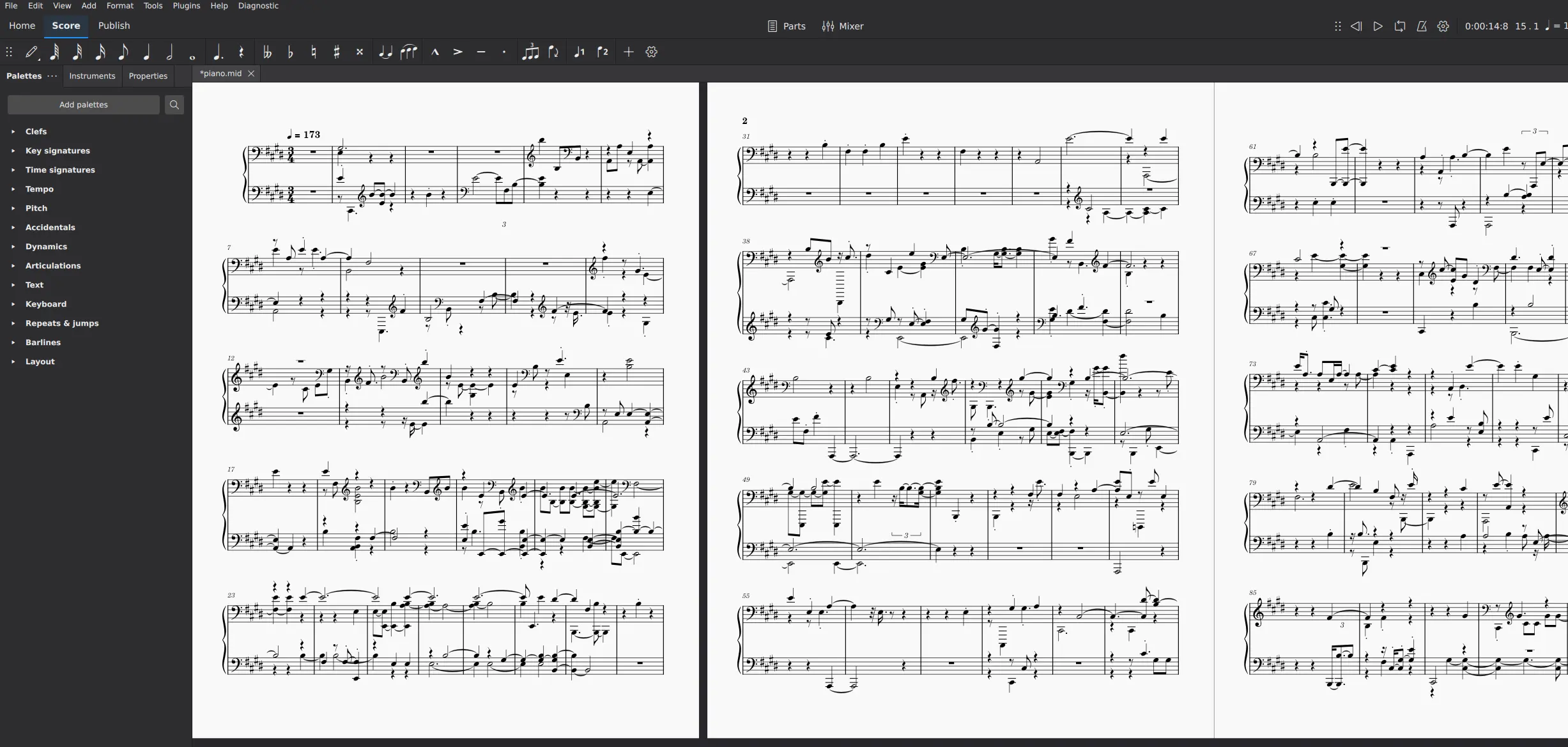

When you get the .mid file, you can load it up in your preferred MIDI software (the screenshot below is from MuseScore) and start editing the notes and tempo:

As an aside, the neural network runs much, much faster on my website than it does on Spotify's online demo, probably simply because I paid attention to maximizing ONNXRuntime performance.

Code on GitHub

My ONNX code for basic-pitch: https://github.com/sevagh/basicpitch.cpp

From demucs.cpp to demucs.onnx

The original implementation of the stem separation on my website was powered by demucs.cpp, a C++ library I wrote myself that implements the inference of Demucs using pure C++17 and the Eigen linear algebra library.

When I was starting out, rewriting the entire inference from scratch seemed to be the only way I could get Demucs to run in the browser/WebAssembly environment. However, after working on Basicpitch and ONNX, I realized that with some small modifications, I could convert the Demucs model to ONNX and run it in the browser using ONNXRuntime.

The performance benefits would be tremendous, since ONNXRuntime is maintained and heavily optimized by professional engineers and scientists, while demucs.cpp is my own amateur work.

Demucs to ONNX strategy

In demucs.onnx, I describe how I succeeded at converting Demucs to the ONNX format.

The main problem is that the Demucs neural network itself contains the STFT and iSTFT (Short-Time Fourier Transform and its inverse). These operations are not supported by ONNX. However, they aren't part of the learned neural network parameters; they are simply deterministic operations applied directly to the input and output of the network.

It's trivial to extract these methods outside of the network:

    # old
    class Demucs(nn.Module):
        def __init__(self, ...):
            ...

        def forward(self, x):
            ...
            x = self.stft(x) # class method i.e. part of network and not supported by ONNX
            ...
            x = self.istft(x) # class method i.e. part of network and not supported by ONNX
            ...
            return x

    # new
    def stft(x):
        ...

    def istft(x):
        ...

    def inference_wrapper(x):
        x = stft(x)
        ...
        x = istft(x)
        ...
        return x

    class Demucs(nn.Module):
        def __init__(self, ...):
            ...

        def forward(self, x):
            ...
            return x
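The same refactoring can be shown end to end with a toy, dependency-free sketch. This is not the Demucs code: the frame size, hop, Hann window, and the identity `core_model` are all assumptions chosen so the example stays tiny, and the naive DFT stands in for the real FFT-based STFT. The point is only that the deterministic transforms live in a wrapper, so the part that would be exported only ever sees spectrogram frames.

```python
# Toy sketch: keep STFT/iSTFT outside the "network" and call them from a wrapper.
# All names and parameters here are illustrative assumptions, not Demucs internals.
import cmath
import math


def hann(n_fft):
    # Periodic Hann window; with hop = n_fft / 2 the overlapping windows sum to 1.
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / n_fft) for n in range(n_fft)]


def dft(frame):
    size = len(frame)
    return [sum(frame[n] * cmath.exp(-2j * math.pi * k * n / size) for n in range(size))
            for k in range(size)]


def idft(spec):
    size = len(spec)
    return [(sum(spec[k] * cmath.exp(2j * math.pi * k * n / size) for k in range(size)) / size).real
            for n in range(size)]


def stft(x, n_fft=8, hop=4):
    window = hann(n_fft)
    return [dft([x[s + n] * window[n] for n in range(n_fft)])
            for s in range(0, len(x) - n_fft + 1, hop)]


def istft(frames, n_fft=8, hop=4):
    # Overlap-add of the inverse-transformed frames.
    out = [0.0] * (hop * (len(frames) - 1) + n_fft)
    for i, spec in enumerate(frames):
        for n, value in enumerate(idft(spec)):
            out[i * hop + n] += value
    return out


def core_model(frames):
    # Stand-in for the exported network: it receives and returns spectrogram frames.
    return frames


def inference_wrapper(x):
    return istft(core_model(stft(x)))


signal = [math.sin(0.3 * n) for n in range(32)]
restored = inference_wrapper(signal)
# Only samples covered by two overlapping frames are reconstructed exactly.
interior_error = max(abs(signal[n] - restored[n]) for n in range(4, 28))
print(interior_error)
```

With an identity model, the interior of the signal comes back unchanged up to floating-point error, which is a handy sanity check that the wrapper's transforms are a matched pair before a real network is dropped in between them.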

Demucs.onnx integration into website

I used the same ort-builder repository to convert the Demucs model to ONNX and ORT format and compiled the ONNXRuntime library with reduced runtime and operator support to create a single static WASM binary again.

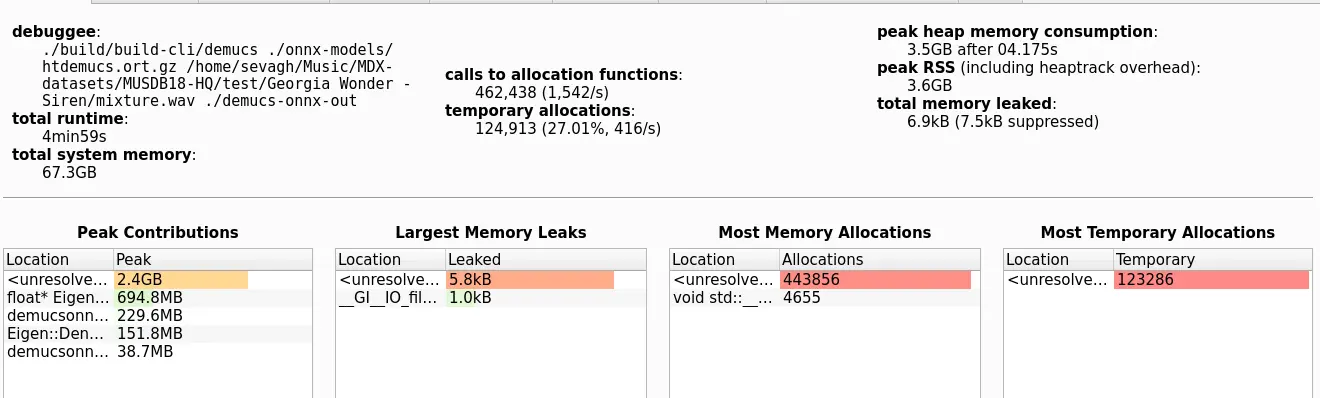

I use my favorite tool, heaptrack, for memory optimizations:

Quantization

I also quantized the neural network. Another advantage of using ONNXRuntime is the advanced quantization support, much better than my handwritten attempts. As it turns out, we can save a lot of disk space for the model by quantizing as much of the network as we can afford without affecting the audio/music quality.

ONNXRuntime quantization guide

Read through the ONNXRuntime quantization guide to understand how to quantize your neural network, including differences between INT8, UINT8, static, and dynamic quantization.

The Demucs architecture consists of convolutional encoder layers, a crosstransformer, and convolutional decoder layers. By quantizing only the crosstransformer, we get the majority of the available disk space savings, since the crosstransformer weights are compressed, without affecting the audio quality at all:

    from onnxruntime.quantization import QuantType, quantize_dynamic
    from onnxruntime.quantization.shape_inference import quant_pre_process

    # onnx_file_path, onnx_file_path_tmp, and model_simplified are defined
    # earlier in the conversion script (not shown here)
    quant_pre_process(str(onnx_file_path), str(onnx_file_path_tmp))
    print(f"Model successfully pre-processed for quantization and saved at {onnx_file_path_tmp}")

    nodes_to_quantize = []

    # Print out all nodes with their names and operator types
    for i, node in enumerate(model_simplified.graph.node):
        print(f"Node {i}: Name={node.name}, OpType={node.op_type}")
        # only quantize non-convolutional layers
        #if 'Conv' not in node.name:
        if 'crosstransformer' in node.name:
            nodes_to_quantize.append(node.name)

    # Apply dynamic quantization; try U8U8
    quantize_dynamic(
        str(onnx_file_path_tmp),
        str(onnx_file_path),
        nodes_to_quantize=nodes_to_quantize,
        weight_type=QuantType.QInt8,
    )
    print(f"Model successfully quantized and saved at {onnx_file_path}")

One caveat for WebAssembly is that int8 operations are not likely to be faster than float32 operations, since WebAssembly is optimized for float32 operations. However, the disk space savings are totally worth it. We saved 40-50% of the weights file size across our 7 models, which is a huge saving in internet bandwidth and load times, and better for our customers overall.
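To show where the disk savings come from, here is a minimal sketch of the basic idea behind int8 weight quantization: store each weight as a one-byte integer plus a shared float scale, instead of a four-byte float. This is a simplified symmetric per-tensor scheme with made-up weights, written for illustration; it is not ONNXRuntime's actual `quantize_dynamic` implementation, which handles zero points and per-operator details itself.

```python
# Minimal sketch of symmetric int8 quantization (illustrative, not ONNXRuntime's code).

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats at inference time."""
    return [q * scale for q in quantized]


weights = [0.82, -0.41, 0.003, -1.27, 0.5]  # made-up example values
quantized, scale = quantize_int8(weights)
approx = dequantize(quantized, scale)

# Rounding moves each weight by at most half a quantization step.
max_error = max(abs(w - a) for w, a in zip(weights, approx))

float32_bytes = 4 * len(weights)
int8_bytes = 1 * len(weights) + 4  # one byte per weight plus one float32 scale
print(quantized, max_error, float32_bytes, int8_bytes)
```

For a large tensor the scale overhead is negligible, so quantized layers shrink to roughly a quarter of their float32 size, while layers left unquantized keep their full size.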

Results

4x faster and about 40% smaller

The new Demucs.onnx model runs 4x faster on my website, and the weights files are about 40% smaller in total (328 MB vs. 545 MB) with identical audio quality.

Aside from the disk space savings, the dramatic advantage of moving Demucs from my custom inference code to ONNXRuntime is that 4x speedup, which is an almost unthinkable performance improvement.
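Working through the totals quoted above (328 MB vs. 545 MB) gives the overall reduction. The last step is only a back-of-the-envelope estimate under assumptions of my own choosing: that the original weights are float32, that quantized weights shrink by 75% (4 bytes to 1 byte), and that file overhead is negligible.

```python
# Arithmetic on the totals quoted in this post; the final estimate is an assumption-laden sketch.
original_mb = 545
quantized_mb = 328

saved_mb = original_mb - quantized_mb   # MB no longer downloaded in total
reduction = saved_mb / original_mb      # fraction of the original size saved

# If quantized weights shrink by 75% and everything else is unchanged,
# the fraction of the original bytes that was quantized is roughly:
estimated_quantized_fraction = reduction / 0.75

print(saved_mb, round(reduction * 100, 1), round(estimated_quantized_fraction, 2))
```

Under those assumptions, a bit more than half of the original weight bytes would have been quantized, which fits with quantizing only the crosstransformer and leaving the convolutional layers in float32.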

Conclusion

There's more to come in 2025 for https://freemusicdemixer.com - stay tuned!